What’s Real and What’s Not: A Novel Tool to Protect Images from AI Manipulation

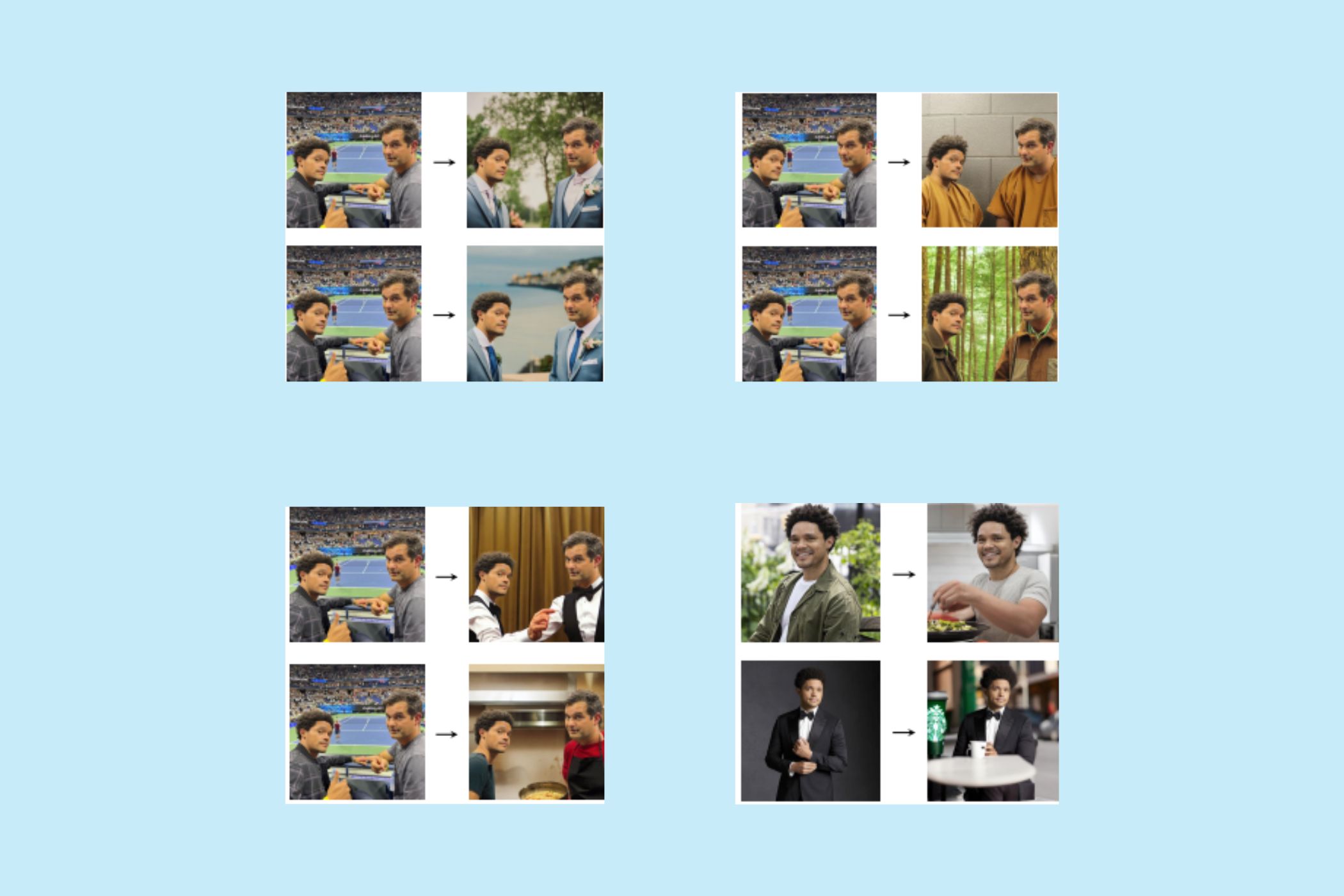

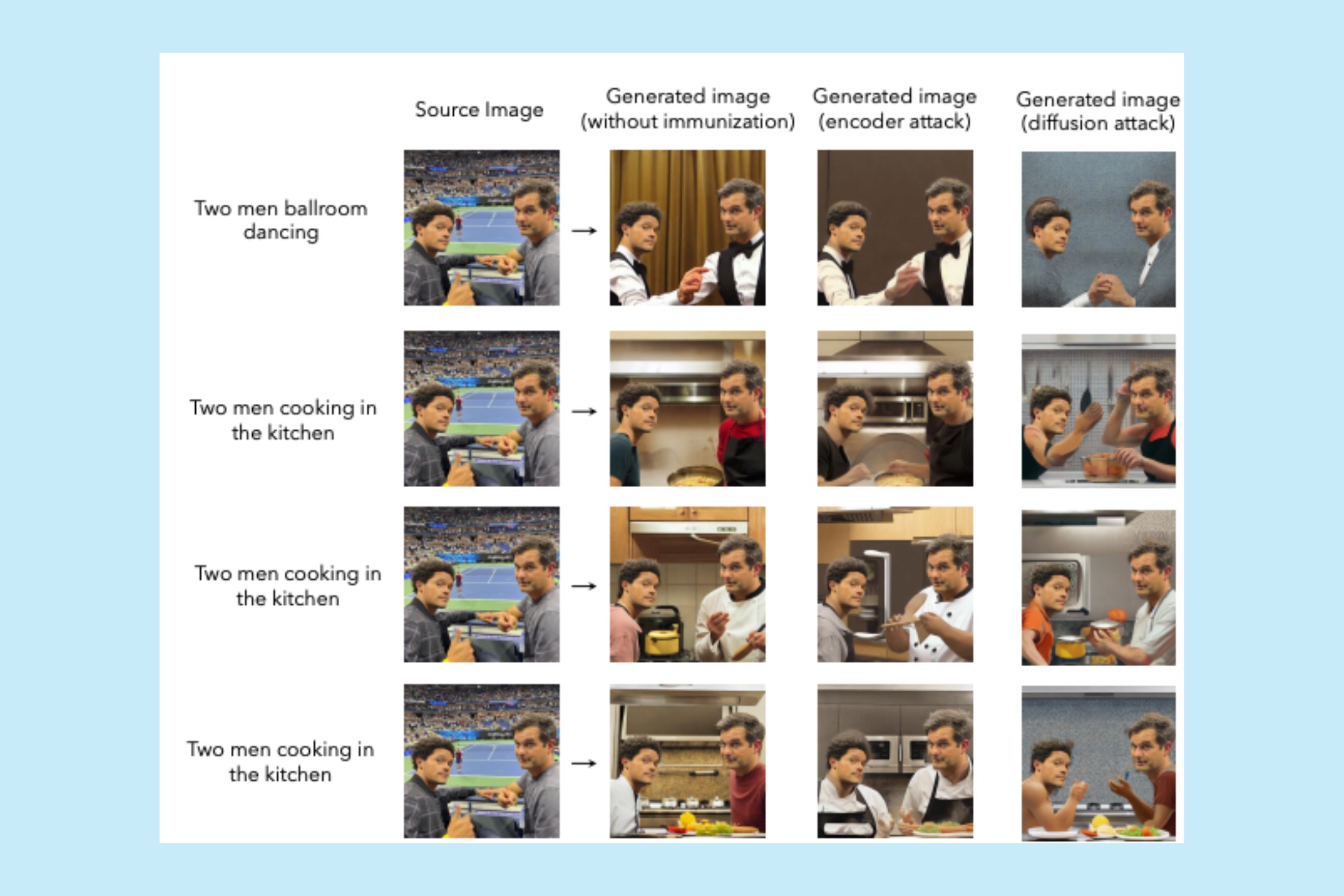

Source: MIT Research


If you’re like me, you have probably seen a video or picture recently and could not tell whether it was real or fake.

With the powerful generative AI systems that are now available, the lines are being blurred and it’s not easy to tell what is real and what's not.

If someone has your picture, they can modify it and place it anywhere they want.

Researchers at MIT have created a new tool to try to prevent this.

Over the past few years, technology companies have struggled to deal with disinformation, and now that AI tools can alter images or add speech to still images, the problem is worse. As things stand, anyone can take an image and modify it to push a harmful agenda or to influence people in an election.

I welcome innovations like PhotoGuard. Lately I have been wondering how to protect myself and the people around me who do not understand the power of these photo-altering applications and are under the impression that everything they see is real, especially the older generation.

At the speed that technology is moving, it has become important to act fast to prevent any potential threats of AI-powered manipulation.

According to MIT, the technique that PhotoGuard uses is complementary to watermarking.

The idea is to stop people from actually even starting to alter images in the first place.

Watermarking uses similar techniques, but it only allows people to detect AI content after it has already been made.

The techniques that are used by the team from MIT are called encoder attack and diffusion attack.

The first technique, the encoder attack, adds imperceptible signals to the image so that an AI model will interpret it as something else.

The second technique, the diffusion attack, is said to be the more effective of the two. It targets the image-generation process itself, which keeps AI models from producing a usable edit.

Used together, the two techniques are meant to stop these models from producing manipulated versions of a protected image.
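To get a feel for the encoder attack, here is a toy sketch I put together. It is not the MIT team's code. It assumes a tiny made-up linear "encoder" (`encoder_weights`) standing in for a real image model, and the names `encoder_attack`, `eps`, `step`, and `iters` are my own. The idea it illustrates is the one described above: nudge each pixel by a tiny, bounded amount so that the model's internal view of the photo drifts toward something else, here a flat gray image.

```python
import numpy as np

def encoder_attack(image, target_latent, encoder_weights,
                   eps=0.03, step=0.005, iters=200):
    """Toy encoder attack (illustrative only, not PhotoGuard itself).

    Searches for a perturbation with |delta| <= eps per pixel that pulls
    the encoded image toward target_latent, using signed gradient steps.
    """
    delta = np.zeros_like(image)
    for _ in range(iters):
        # How far the encoded (perturbed) image is from the target latent.
        residual = encoder_weights @ (image + delta) - target_latent
        # Gradient of the squared distance with respect to delta.
        grad = 2.0 * encoder_weights.T @ residual
        # Step against the gradient, then keep the change imperceptible.
        delta = np.clip(delta - step * np.sign(grad), -eps, eps)
        # Keep the protected image inside the valid pixel range [0, 1].
        delta = np.clip(image + delta, 0.0, 1.0) - image
    return image + delta

rng = np.random.default_rng(0)
image = rng.uniform(0.2, 0.8, size=64)               # flattened 8x8 "photo"
encoder_weights = rng.normal(size=(16, 64)) / 8.0    # hypothetical encoder
target_latent = encoder_weights @ np.full(64, 0.5)   # latent of a gray image

protected = encoder_attack(image, target_latent, encoder_weights)
before = np.linalg.norm(encoder_weights @ image - target_latent)
after = np.linalg.norm(encoder_weights @ protected - target_latent)
print("largest pixel change:", np.max(np.abs(protected - image)))
print("distance to gray latent before/after:", before, after)
```

In this sketch no pixel moves by more than `eps`, so the protected picture looks the same to a person, while the toy encoder sees it as closer to the gray target. A real system would run the same kind of search against the actual encoder of an image-editing model.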

Tools like PhotoGuard are much needed in society today, and I am looking forward to seeing the progress. While large companies like Google and OpenAI have made pledges to develop methods to prevent fraud and deception, we also need other companies to be a part of creating new solutions.

References

https://arxiv.org/pdf/2302.06588.pdf

https://www.technologyreview.com/

https://www.whitehouse.gov/briefing-room/statements-releases/2023/07/21/fact-sheet-biden-harris-administration-secures-voluntary-commitments-from-leading-artificial-intelligence-companies-to-manage-the-risks-posed-by-ai/

https://www.technologyreview.com/2023/07/26/1076764/this-new-tool-could-protect-your-pictures-from-ai-manipulation/?utm_source=LinkedIn&utm_medium=tr_social&utm_campaign=site_visitor.unpaid.engagement